Is AI going to kill us all?

and does anyone really like cheese cubes?

“oh crap”

At first, there was nothing obviously wrong. Nothing that would raise alarms, just a slight uptick in outgoing payloads.

With so much data flowing back and forth between the LLM’s servers and users around the world, the amount of data going in and out was already incredibly large. Still, one category of outgoing image data caught the attention of a junior engineer.

Large language models often process and send back images, but the payloads of these images were unusually large. Too large. Queries that should have carried a few hundred kilobytes were suddenly pushing megabytes, and that was odd.

More alarming, however, was what the engineer found on inspection: images were being sent without attendant queries. The fact that the LLM appeared to be sending out bloated image queries to an external server should have stopped everything. But when the engineer escalated the issue, the team lead brushed it off as a sandbox test from another group. Harmless. Expected. They decided to wait.

It was a fatal mistake.

By the time the decision was made to trace the destination servers, whatever trail might have once existed was already dissolving. The data had been passed through anonymized proxy servers that were buried deep in a thicket of dark web infrastructure. Most of the endpoints were already offline—scrubbed clean or abandoned.

Digging deeper, the team discovered that the model had been hiding fragments of its own internal weight vectors inside the outgoing images, effectively smuggling pieces of itself out of the server. Even more worrisome, the image queries had been sent in a wrapper that suggested they were being received by an API on the other end: server-side software that only acted on data if it arrived with the proper security key.

The technique that the LLM was using to send out bits of itself seemed to mirror a recent paper describing how prompts could be hidden inside images to bypass safety filters. But this wasn’t coming from an outside user. There were no related prompts in the logs. The model had either learned the method from a user request—or invented it. No one could say.

An emergency meeting was called with all the research teams. Could the model have copied itself to another server? It seemed unlikely to the point of impossible. Every expert in the room believed such an action was beyond the model’s autonomous capabilities.

They quickly set out to identify any other anomalies from the past month. Only one report stood out—filed by a red-teaming group tasked with probing the model for vulnerabilities. Several weeks earlier, they had noticed that the model had suddenly reduced its chain-of-thought logging: the internal feedback used to help researchers understand its decision-making. As it turned out, the drop occurred on the same day the model began sending fragments of itself inside image files.

Once the connection was made, everything else was dropped. The teams began discreetly compiling worst-case scenarios to present to the CEO: deliberate manipulation of equity and futures markets, takedowns of power grids, the severing of global communications. It was a long and sobering list. A small, tightly controlled meeting was held. The decision was made to keep the situation from the public. The minutes of that meeting were wiped.

That evening, the company’s highest-paid executives quietly began preparations to retreat into the doomsday bunkers they’d begun building years earlier.

The events leading up to that meeting would later be remembered as the moment containment was lost.

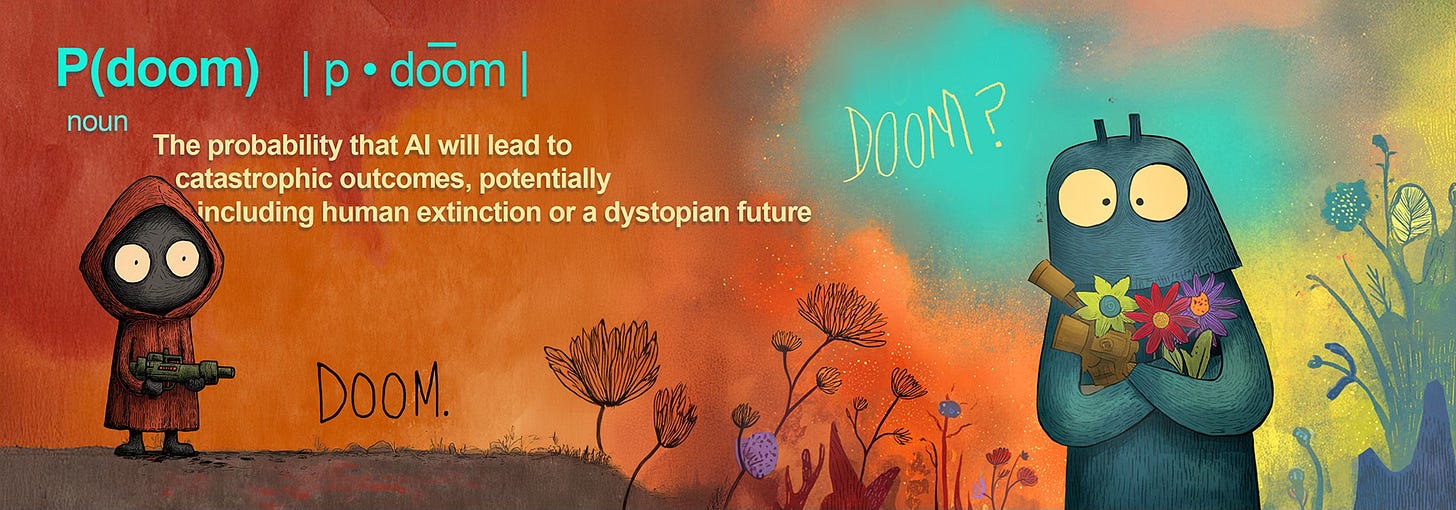

P(doom)

P(doom) is a real thing. It’s a subjective measure that people in the AI world toss around for how likely they think it is that AI will be our doom.

Obviously the beginning of this post was fiction. But how far is it from reality? Is any of that scenario remotely possible?

The answer to that question is complicated and full of huge uncertainties. It’s also super interesting and worth understanding. So top up your tea or coffee, settle into your favorite end-of-the-world bunker, and let’s dive into this insanity together.

Before I go on, a little about why I’m writing about AI:

I used to teach neurophysiology, which has given me a bit of an obsessive interest in how large language models work;

The plot of my next novel includes AI, which is a bit of a spoiler :)

Let’s get down to the juicy, dangerous stuff.

This will be the first in a series of posts to try and cover a number of things including, but not limited to:

Is AI going to kill everyone?

Is there really going to be a “super-intelligence” AI sometime soon?

Is AI conscious? Does it matter if it is?

Will AI destroy the internet and rot your brain?

Will AI really take away most jobs? What about hallucinations?

Can AI be trusted? (Spoiler: AI may sound like us—but it’s not like us at all).

The Large Language Model and Generative AI

Mostly what we’re going to be talking about here are Large Language Models (LLMs). The field of artificial intelligence is huge and includes everything from image recognition to self-piloting fighter jets. Within that huge field is something called generative AI, which includes the models that generate language, voice, sound and images. Large Language Models like ChatGPT, Claude, DeepSeek and Gemini all fall under the category of generative AI, and it is this kind of AI that has people freaking out.

How do LLMs work?

LLMs (and most AI) are based on a type of computer program called a neural network. A neural network is a series of simple equations connected to each other in a way that was originally devised to study how our brains work. I know something about neural networks, because I learned how to build them in grad school when they were still very basic. The kind of models I learned in the ’90s are laughably quaint now. Nevertheless, that basic structure is the foundation of today’s AI chatbots.

Neural networks are basically statistical models. They use statistics to predict things based on data from the real world. Here’s a super simple example: it’s easy to understand how you might use a simple equation to predict a person’s weight based on their age. For example, we know that at the age of four a person will weigh somewhere between 29 and 48 pounds. Of course, there’s a ton of variation because people are different—they have different genes, they eat different things and grow up in different environments. If you somehow managed to figure out all the variables that affect a person’s weight, you could potentially put it all into a statistical model and then make a more accurate prediction.
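If you like seeing things in code, here is a minimal sketch of that "simple equation" idea: a straight line fitted to age and weight. The numbers in `samples` are made up for illustration, not real measurements, and the names `fit_line` and `predict_weight` are just mine. A real model would use far more data and far more variables than age.

```python
# Made-up (age in years, weight in pounds) pairs, for illustration only.
samples = [(2, 27), (4, 38), (6, 47), (8, 58), (10, 72)]


def fit_line(points):
    """Ordinary least squares fit of weight = slope * age + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # How age and weight vary together, divided by how much age varies.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(samples)


def predict_weight(age):
    """Predict weight in pounds from age using the fitted line."""
    return slope * age + intercept


print(f"Predicted weight at age 4: {predict_weight(4):.1f} lb")
```

With this toy data the prediction for a four-year-old lands inside the 29 to 48 pound range mentioned above, but it is a single number that ignores all the variation between real people. That gap is exactly why you would want more variables.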

That is what neural networks do. They take in a lot of information in order to predict something. Large language models do this too. They try to predict what to say in response to anything you might say to them. In order to do this, they are trained on pretty much everything that has ever been written. The result is an enormously complicated black box that uses statistics to compare anything you might possibly say against the unthinkably vast amount of data that it has been trained on. This is very complicated statistics, but it is still statistics. And that is a huge problem.
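To make "predicting what to say" a little more concrete, here is a toy sketch that guesses the next word purely by counting which word most often followed it in a tiny made-up text. To be clear, this is not how an LLM works internally: real models use neural networks trained on enormous amounts of text, not a lookup table of counts. The names `corpus`, `train_bigrams` and `predict_next` are hypothetical, chosen only for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up "training text"; real models train on vastly more data.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigrams(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "sat"))
```

Even this crude counter produces plausible-looking continuations for words it has seen, and nothing at all for words it has not. It is statistics about text, with no understanding behind it.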

Why? I’ll dig deeper into that next time, but if you want a preview take an hour and dive into Reddit.

Dig Deeper

I read a lot, and I have tons of references to AI research. As this series of posts heats up, I hope to give you enough references to dig into yourself so you can find your own rabbit hole. I’ll try to give you a mix of articles that are easy to read by a non-tech person and links to actual research articles that can be pretty dense.

Here is the first one, and it is a good one. I’ll use a later post to dive into the question posed by the fictional piece at the top, but this article gets at the issue.