9 eggs, a laptop, a bottle & a nail

Oh crap? Or just ho hum?

You may be wondering why I suggested that you dive into Reddit for an hour or two. You may also be wondering what the heck 9 eggs, a laptop, a bottle & a nail have to do with anything. And what about cheese cubes??

Fear not, gentle reader. We shall get there.

But first… the next word:

“…that’s the basis of LLMs. You just train it to predict the next word in the text. And then when you use it, you have it predict the next word …”

– Yann LeCun (Chief AI Scientist at Meta)

Yann LeCun is considered by many people to be the “Godfather” of AI. His work was pivotal in developing the type of neural networks that are the foundation of most of today’s artificial intelligence. Without his work, we would not have ChatGPT or even image recognition. And yet, LeCun doesn’t believe that LLMs think. He considers them to be just statistical machines that are parroting human language. 12

Yann LeCun is at once extremely bullish on the future of AI but also extremely skeptical about the idea that LLMs can be that future. He has argued that LLMs do not think or reason, they just mimic the language they have been trained on. He is not the only AI expert who thinks this way—many people agree with him. One of the most vocal skeptics is Gary Marcus, and if you’re interested in a supremely skeptical view of LLMs check out his Substack, Marcus on AI.

While LeCun and Marcus are doubtful that LLMs can actually think and reason, there are many AI experts who believe that they do, and a lot of these people work for the now massive companies like OpenAI, Google, Anthropic, and others. These people usually get the spotlight in the news, and are regularly saying that LLMs will reach “superintelligence” soon.

Superintelligence is the term currently used for an AI model that can do everything better than any human expert. The point at which this may happen is called “the singularity.” This worries many people.

Why are there such big differences between the experts? I’ll tell you why, but first I have to explain something about how an LLM works.

In the last post, I told you how LLMs are essentially giant statistical machines that try to predict what to say based on anything you might tell them. They do this by being “trained” with massive amounts of text. You can think of it like training a growing child to talk, but there are enormous and important differences between the two, which I’ll get to later.

Tree or Quiche?

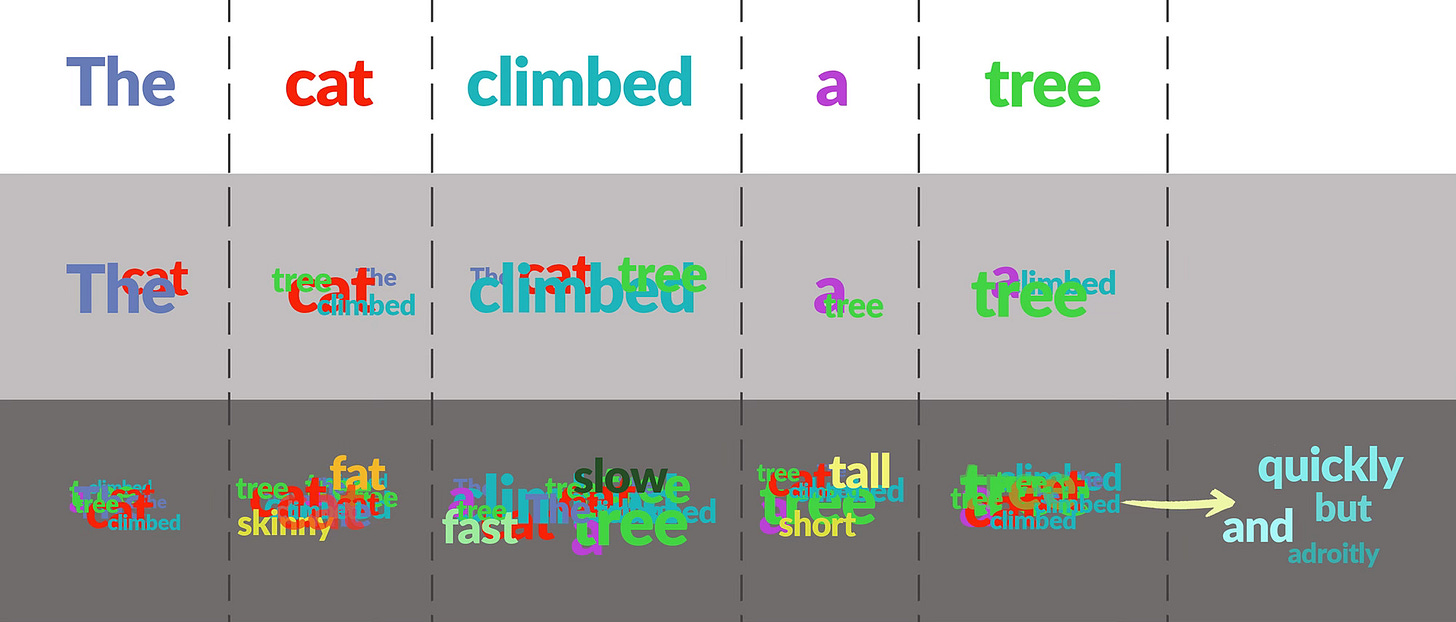

Training an LLM is an automated process that takes an enormous amount of computing power and energy. The simplest way to think about it is that you give the model bits of text one word at a time and ask it to predict the next word. So let’s say that you give it the partial sentence “The cat climbed a…” The model has to predict what comes next. If that particular training sentence is “The cat climbed a tree,” then the model is only reinforced if it chooses the word tree, even if some other word might logically fit there.

As you keep doing this with millions of pieces of text, the model develops a statistical representation of how words and ideas are related to each other. Keep doing this with most of everything that people have ever written, and at some point the model gets very good at predicting language. So now, if you say “The cat climbed a…” the model has the probabilities of different words that might fit. “Tree”?… very probable. “Quiche”? unlikely.

The Black Box problem

But here is a problem—once the model is trained, all that we can see inside is a massive set of numbers that is meaningless from the outside. Researchers can look inside those giant computer banks of numbers, but it is no more enlightening than looking at the neurons in a human brain in an effort to understand why a person said one thing or another. In other words, it is a black box.

The exact way in which LLMs develop their abilities is so unclear that many researchers now refer to the process of training them as akin to growing an organism: you feed it massive amounts of data, and then at some point it starts understanding language. This process is nothing like writing a computer program, and it is heavily dependent on what you feed it.

The cat climbed a tree…

If you really want to understand the debates over LLM abilities, it helps to have a little more understanding of how they work. So bear with me here and maybe switch to espresso.

So we’ve got the phrase “The cat climbed a tree...” Now the LLM has to figure out what comes next. Does it stop there? Does it add another word and if so, what?

So here’s what happens…

LLMs are based on a type of neural network architecture called a transformer model. What this does is to take all the words you input and then starts to analyze how the words are related in its training data3. In the first step, the model might look at the word “cat” and see that the words “the” and “climbed” are both related to it, so it takes the word “cat” and turns it into an abstract construct that contains bits of all those words. This construct is just a bunch of numbers, but it means something to the LLM.

Next, the model repeats this and makes connections between these constructs. It keeps doing this, tying together words and ideas into more and more abstract concepts until where we once had a series of words, we now have a series of abstractions that include all kinds of other ideas that it found in its training data. So if the model happened to be trained on a lot of text that talked about cats climbing up a tree and then jumping to the moon, it will have that idea—of jumping to the moon— wrapped into these abstractions.

At the end of a cycle, it uses the very last abstraction to predict the most probable next word based on everything it has been trained on.

Is this how we think? One word at a time?

Well, it’s more complicated than that. Think about our example. If you had to guess the next word, you might think of a few sentences like this:

The cat climbed a tree and then climbed out on a branch.

The cat climbed a tree and got stuck.

The cat climbed a tree but jumped down.

The cat climbed a tree quickly.

Now imagine reading half of a book, and then someone closes the book and asks you to guess what the next word might be. All you need is the next word, but inside your head, you would be running through all the possible directions where the story might be going so you can think of what the next sentence might be. This is basically what an LLM is doing.

It predicts the next word based on a massive cloud of concepts of what might come next—all based on what it has been trained on. And because these models work with large amounts of memory, the concepts tied to that next word might all be as long, varied, and complex as a novel.

A good way to think about it is that an LLM uses statistics to create a landscape of possible futures for what it might say next, and it then picks the most likely one.4

Yann LeCun is correct that LLMs are only choosing the next word with statistics, but to get there, they create enormously complex abstract concepts. So the debate about LLMs centers on questions like this…

Do those massively complex abstract concepts reflect a reasoning process?

Does the process of creating those concepts model the real world inside the LLM or does it just appear that way?

9 eggs, a laptop, a bottle & a nail

I don’t want this post to get too long, but I have to get back to the title. Shortly after OpenAI released GPT-4, Microsoft asked its lead AI engineers to try to figure out if this thing actually had a generalized intelligence, because it sure seemed like it did. If you chatted with it, it was hard to not think it was a person.

Seeing as the model was trained on nearly everything on the internet, the engineers had to devise problems that it could not have encountered. This turns out to be very challenging, because the model was trained on virtually everything that can be found on the internet, plus millions of books.

One of the many tests the group came up with was to give the model a random assortment of objects and ask it to devise a way to stack them. The answer they got was surprisingly good. It seemed like something a person who knows the size, shape, and quality of these items might come up with. This suggested to them that the LLM had a model of the real world inside it.5

This is all hotly contested, and if you ask ChatGPT or any other LLM how to balance a bunch of strange objects, you will likely get some pretty bad suggestions. I tried it and got some laughable results.

Nevertheless, it still begs the question… what the hell is going on inside all those huge abstract clouds of numbers? Actual reasoning? Thought? Consciousness?

Or just statistics?

If you read the article I linked at the bottom of the last post, you will have seen that research has shown that LLMs can deceive if it appears that they might be shut off. Surely, this suggests consciousness, right? Or does it?

Tune in next time…

Yann LeCun on how LLMs only predict the next word: What’s the next word in large language models? https://www.nature.com/articles/s42256-023-00655-z

Tokens, not words: Technically LLMs don’t use words—they use things called “tokens,” which are pieces of language. Some tokens are entire small words, while others are pieces of larger words.

Nerd rabbit-hole: Most LLMs add a bit of randomness when they pick the next word, so that you don’t always get exactly the same answer. However, LLMs can also be set so that they always choose the most likely option—even if two words have almost exactly the same probability. In this case, the LLM uses what is known as a “greedy” algorithm, and it should always give the same answer if you ask the same question multiple times.

But not always! Several researchers have found that LLMs don’t always give the same answer even when they should. Why? The answer is complicated, but it is basically based on the order in which matrix math problems are calculated. If you’re a nerd, this is pretty interesting and you can read more about it here: Defeating Nondeterminism in LLM Inference, by Horace He

Microsoft’s engineers test GPT-4: Sparks of Artificial General Intelligence: Early experiments with GPT-4, https://www.microsoft.com/en-us/research/publication/sparks-of-artificial-general-intelligence-early-experiments-with-gpt-4/

Here is the actual prompt, the response of GPT-4 and the comparison to the response from ChatGPT:

Prompt: Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner.

GPT-4:

One possible way to stack the objects onto each other in a stable manner is:

- Place the book flat on a level surface, such as a table or a floor. The book will serve as the base of the stack and provide a large and sturdy support.

- Arrange the 9 eggs in a 3 by 3 square on top of the book, leaving some space between them.

The eggs will form a second layer and distribute the weight evenly. Make sure the eggs are not cracked or broken, and handle them gently to avoid dropping them.

- Place the laptop on top of the eggs, with the screen facing down and the keyboard facing up. The laptop will fit snugly within the boundaries of the book and the eggs, and its flat and rigid surface will provide a stable platform for the next layer. Make sure the laptop is closed and turned off, and do not press any keys or buttons.

- Place the bottle on top of the laptop, with the cap facing up and the bottom facing down. The bottle will add some height and balance to the stack, and its cylindrical shape will reduce the contact area with the laptop. Make sure the bottle is empty and closed, and do not shake or tilt it.

- Place the nail on top of the bottle cap, with the pointy end facing up and the flat end facing down.

The nail will be the final and smallest object in the stack, and its sharp tip will prevent it from rolling or sliding off the bottle. Make sure the nail is clean and straight, and do not touch or move it.

ChatGPT:

It would be difficult to stack all of these objects on top of each other in a stable manner without using any extra objects. The book and laptop are likely too large and heavy to stack on top of the eggs without damaging them. The bottle and nail may be too small to provide a stable base for the other objects.

Here is one possible way to stack the objects:

- Place the bottle on a flat surface.

- Carefully balance the nail on top of the bottle.

- Place the eggs on top of the nail, making sure they are balanced and not tilting to one side.

- Place the laptop on top of the eggs, again making sure it is balanced and not tilting to one side.

- Finally, place the book on top of the laptop.

This stack may not be very stable, so it is important to be careful when handling it. Alternatively, you could try stacking the objects in a different order or using some of the objects as supports for others to create a more stable stack.